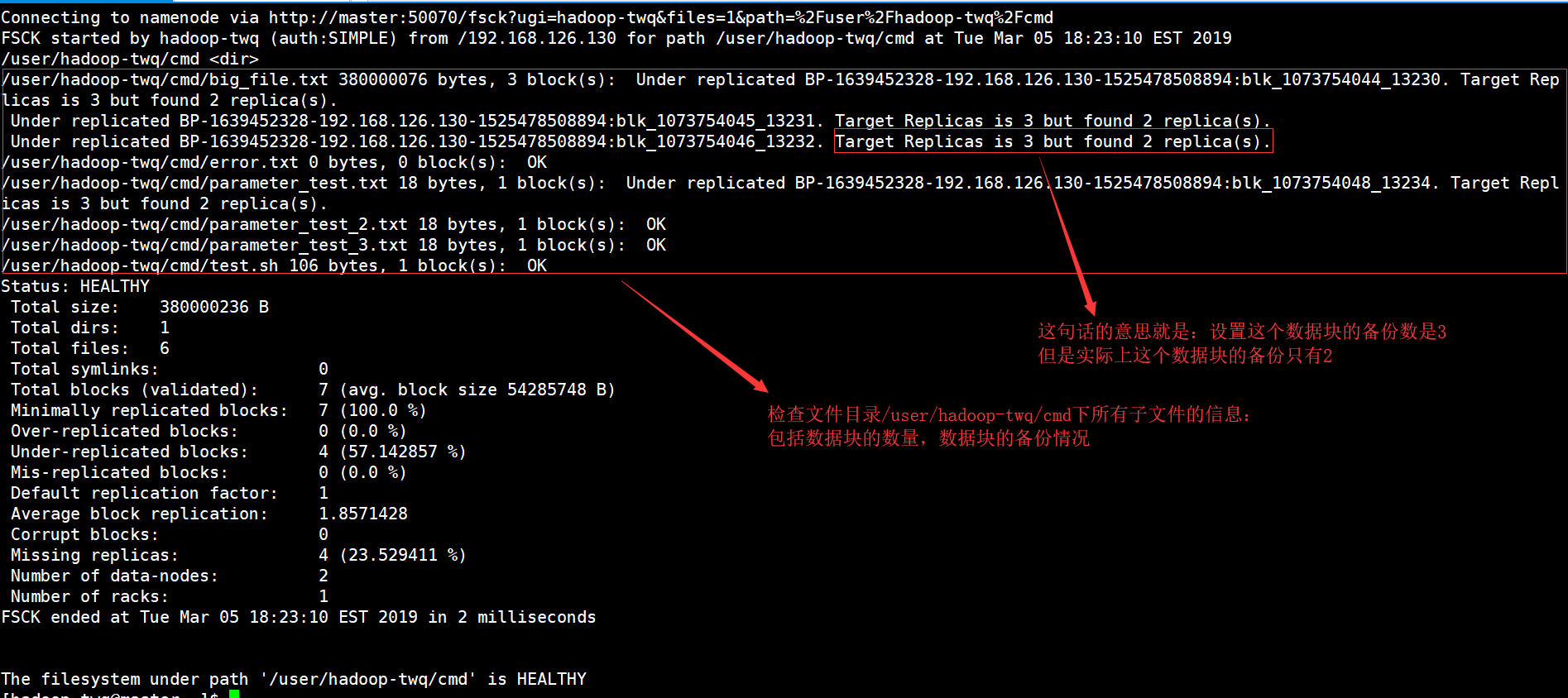

You may run into errors when checking the Hadoop file system, for example after an update. There are several steps you can take to verify and fix this, covered below. First, run the fsck command on the NameNode as $HDFS_USER: su - hdfs -c "hdfs fsck / -files -blocks -locations > dfs-new-fsck-1.log". Next, capture the HDFS namespace listing to a file. Then compare the namespace report from before the update with the one from after it. Finally, check reads and writes to make sure HDFS is working properly.
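The step of comparing the namespace before and after the update can be sketched in Python. This is a minimal illustration, assuming each listing has been saved as plain text with one path per line; the function name `compare_namespace_listings` and the sample paths are hypothetical and not part of Hadoop.

```python
def compare_namespace_listings(before_text, after_text):
    """Return (missing, added) paths between two saved namespace listings."""
    # Assumption: one path per line; blank lines are ignored.
    before = {line.strip() for line in before_text.splitlines() if line.strip()}
    after = {line.strip() for line in after_text.splitlines() if line.strip()}
    missing = sorted(before - after)  # present before the update, gone after
    added = sorted(after - before)    # new since the update
    return missing, added


# Hypothetical listings, for illustration only.
before_listing = """/user/alice/data.csv
/user/alice/report.txt
/tmp/job-001
"""
after_listing = """/user/alice/data.csv
/tmp/job-001
/tmp/job-002
"""

missing, added = compare_namespace_listings(before_listing, after_listing)
print("missing after update:", missing)
print("new after update:", added)
```

An empty `missing` list suggests that no paths were lost, although it says nothing about block health, which is what the fsck report is for.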

What is the HDFS fsck command?

The fsck command is used to check for various inconsistencies in an HDFS file system or under a given HDFS path. It reports on the health of the files in the file system. It can find under-replicated blocks, missing files, corrupt blocks, over-replicated blocks, etc.

Hadoop FS Command Line

The Hadoop FS command line is an easy-to-use interface for accessing HDFS. Below are some basic HDFS commands on Linux, covering operations such as creating directories, moving files, deleting files, reading files and listing directories.

HDFS File System Commands

Apache Hadoop has a simple yet basic command interface for accessing the underlying Hadoop Distributed File System. In this section, we present the most basic and useful HDFS file system commands, which match or resemble their UNIX file system counterparts. Once the Hadoop daemons are up and running, these commands can be used against the HDFS file system. File system operations such as creating directories, moving files, adding files, deleting files, reading files, and listing directories can all be done easily through it.
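As a rough sketch of how these basic operations map onto the command line, the Python helper below builds argument lists for `hdfs dfs` subcommands (`-mkdir`, `-put`, `-mv`, `-cat`, `-ls`, `-rm`). The helper name `hdfs_dfs` and all paths are hypothetical; nothing is executed here, so the example runs without a Hadoop installation.

```python
import shlex


def hdfs_dfs(action, *paths):
    """Build the argument list for one `hdfs dfs` file system command."""
    # Only the subcommands discussed in this section are allowed.
    allowed = {"mkdir", "put", "mv", "cat", "ls", "rm"}
    if action not in allowed:
        raise ValueError(f"unsupported action: {action}")
    return ["hdfs", "dfs", f"-{action}", *paths]


# Hypothetical paths, for illustration only.
commands = [
    hdfs_dfs("mkdir", "/user/demo"),                         # create a directory
    hdfs_dfs("put", "notes.txt", "/user/demo"),              # add a local file
    hdfs_dfs("mv", "/user/demo/notes.txt", "/user/demo/n.txt"),  # move or rename
    hdfs_dfs("cat", "/user/demo/n.txt"),                     # read a file
    hdfs_dfs("ls", "/user/demo"),                            # list a directory
    hdfs_dfs("rm", "/user/demo/n.txt"),                      # delete a file
]

for argv in commands:
    print(shlex.join(argv))
```

On a machine with a configured Hadoop client, each list could be passed to `subprocess.run(argv, check=True)` to actually run the command.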

How HDFS Differs From “regular” File Systems

Although HDFS is considered a file system, it should be understood that it differs considerably from traditional POSIX file systems. HDFS was designed for a single workload, MapReduce, and does not support general workloads. HDFS is also write-once, meaning a file is written once and is read-only after that. This makes it an ideal fit for Hadoop and other large-scale data analysis applications; however, you could not run a transactional database or virtual machines on HDFS.

Checking Specific Information

If we want to see only specific types of entries in the fsck report, we can run grep over the report. If we only want to see under-replicated blocks, we can grep as follows: hdfs fsck / -files -blocks -locations | grep -i "Under replicated". A matching line looks like this: /data/output/_partition 297 bytes, 1 block(s): Under replicated BP-18950707-10.20.0.1-1404875454485:blk_1073778630_38021. Target Replicas is 10, but fewer replicas were found. We can replace "Under replicated" with "corrupt" to see corrupt files instead.
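The same filtering can be done on a saved fsck report in Python. The sketch below imitates a case-insensitive grep; the function name `filter_fsck_report` is hypothetical, and the sample report lines are invented, simplified stand-ins rather than real fsck output.

```python
def filter_fsck_report(report_text, keyword="Under replicated"):
    """Return the report lines containing keyword, ignoring case (like grep -i)."""
    needle = keyword.lower()
    return [line for line in report_text.splitlines() if needle in line.lower()]


# Invented, simplified report lines for illustration only (not real fsck output).
sample_report = """/data/a.txt 1024 bytes, 1 block(s): OK
/data/b.txt 297 bytes, 1 block(s): Under replicated blk_0001
/data/c.txt 2048 bytes, 1 block(s): OK
/data/d.txt 512 bytes, 1 block(s): CORRUPT blk_0002
"""

for line in filter_fsck_report(sample_report):
    print(line)

for line in filter_fsck_report(sample_report, keyword="corrupt"):
    print(line)
```

Switching the keyword is the equivalent of changing the grep pattern, so one saved report can be queried several times without rerunning fsck.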

4. Working With The Hadoop File System

The Hadoop file system, HDFS, can be used in a variety of ways. This section discusses the most common protocols for interacting with HDFS, as well as their advantages and disadvantages. SHDP does not enforce the use of any specific protocol; in fact, as described in this section, any file system implementation can be used, including implementations other than HDFS.

Example 4: Hadoop Word Count Using Python

Even though the Hadoop framework is written in Java, map/reduce programs can be developed in other languages such as Python or C++. This example shows how to run the simple word count example again, this time using a mapper/reducer developed in Python.
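A minimal sketch of what such a Python mapper and reducer might look like follows. It is an illustration of the word count idea, not the original example's code: both functions are kept in one file and fed from an in-memory list so the block runs without a cluster, and a plain `sorted` call stands in for the sort that happens between the map and reduce phases. In an actual streaming job, the mapper and reducer would typically be separate scripts reading from standard input.

```python
from itertools import groupby


def mapper(lines):
    """Emit one tab-separated 'word<TAB>1' record per word."""
    for line in lines:
        for word in line.split():
            yield f"{word.lower()}\t1"


def reducer(sorted_records):
    """Sum the counts of consecutive records sharing the same word."""
    parsed = (record.rsplit("\t", 1) for record in sorted_records)
    for word, group in groupby(parsed, key=lambda pair: pair[0]):
        yield word, sum(int(count) for _, count in group)


# Hypothetical input text, for illustration only.
text = ["Hadoop stores data", "Hadoop processes data in parallel"]

shuffled = sorted(mapper(text))  # stands in for the sort between map and reduce
for word, total in reducer(shuffled):
    print(f"{word}\t{total}")
```

The reducer relies on its input being sorted by word, which is why `groupby` is enough and no dictionary of all words has to be held in memory.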

How can I check my Hadoop data?

First, view the data in HDFS using the cat command: $HADOOP_HOME/bin/hadoop fs -cat /user/output/outfile. Then copy the files from HDFS to the local file system using the get command: $HADOOP_HOME/bin/hadoop fs -get /user/output/ /home/hadoop_tp/

HPC: Hadoop Distributed File System (HDFS) Tutorial

MapReduce is a software framework, originating at Google, for easily writing applications that process large amounts of data in parallel on clusters. A MapReduce computation consists of two types of tasks: map tasks and reduce tasks.

Once the Hadoop distributed file system is up and running, you can do almost anything with it that you can do with a local file system. You can perform various read and write operations such as creating a directory, setting permissions, copying files, updating files, deleting files, etc. You can also set permissions and browse the cluster's file system to get information such as the number of dead nodes, the number of live nodes, the disk space used, etc.

HDFS File List

After loading the information onto the server, we can list the files in a directory, or check the status of a file, using "ls". The ls command accepts a directory or a filename as its argument: $HADOOP_HOME/bin/hadoop fs -ls <args>

Checking HDFS Disk Usage

Throughout the book, I show how to use various HDFS commands in their relevant context. Let's take a look at some HDFS space and file related commands here. You can get help for any HDFS file command through the -help option of hdfs dfs.

What is a checksum in Hadoop?

The checksum chunk size is controlled by a property that defaults to 512 bytes. The chunk size is stored as metadata in the .crc file, so the file can still be read back correctly even if the chunk-size setting has changed. Checksums are verified when the file is read, and if an error is detected, LocalFileSystem throws a ChecksumException.
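The idea of per-chunk checksums can be illustrated with a short Python sketch. It computes one CRC-32 value per 512-byte chunk and verifies the data on read. This is only a model of the technique: `zlib.crc32` is used as a convenient checksum, the on-disk .crc format is not reproduced, and `ChecksumMismatch` is a hypothetical stand-in for Hadoop's ChecksumException.

```python
import zlib

CHUNK_SIZE = 512  # bytes per checksum, matching the default mentioned above


class ChecksumMismatch(Exception):
    """Hypothetical stand-in for Hadoop's ChecksumException."""


def chunk_checksums(data, chunk_size=CHUNK_SIZE):
    """Return one CRC-32 value per chunk of the data."""
    return [zlib.crc32(data[i:i + chunk_size])
            for i in range(0, len(data), chunk_size)]


def verify(data, expected, chunk_size=CHUNK_SIZE):
    """Recompute checksums on read and raise on the first mismatch."""
    actual = chunk_checksums(data, chunk_size)
    for index, (got, stored) in enumerate(zip(actual, expected)):
        if got != stored:
            raise ChecksumMismatch(f"checksum error in chunk {index}")


original = bytes(range(256)) * 5       # 1280 bytes, so three chunks
stored = chunk_checksums(original)     # what would be kept alongside the file
verify(original, stored)               # intact data passes silently

corrupted = bytearray(original)
corrupted[600] ^= 0xFF                 # damage one byte inside the second chunk
try:
    verify(bytes(corrupted), stored)
except ChecksumMismatch as err:
    print(err)
```

Checksumming small chunks rather than the whole file means a read can pinpoint which part of the data is damaged, at the cost of storing one checksum per chunk.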
